It has always been the mission of R developers to connect R to the “good stuff”. As John Chambers puts it in his book Extending R:

One of the attractions of R has always been the ability to compute an interesting result quickly. A key motivation for the original S remains as important now: to give easy access to the best computations for understanding data.

From the day it was announced a little over two years ago, it was clear that Google’s TensorFlow platform for Deep Learning is good stuff. This September (see announcement), J.J. Allaire, François Chollet, and the other authors of the keras package delivered on R’s “easy access to the best” mission in a big way. Data scientists can now build very sophisticated Deep Learning models from an R session while maintaining the flow that R users expect. The strategy that made this happen seems to have been straightforward, but the smooth experience of using the Keras API points to inspired programming all along the chain from TensorFlow to R.

The Keras Strategy

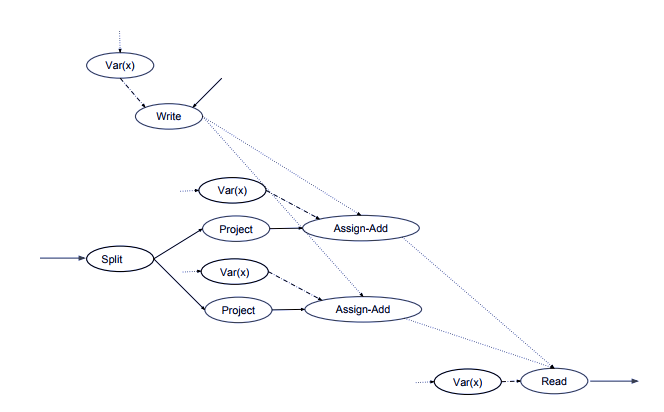

TensorFlow itself is built on dataflow programming over a directed graph. Operations are nodes on the graph, and the data, multi-dimensional arrays called “tensors”, flow along the graph’s edges as directed by control signals. An overview and some of the details of how this all happens are lucidly described in a paper by Abadi, Isard and Murray of the Google Brain Team, and even more details and some fascinating history are contained in Peter Goldsborough’s paper, A Tour of TensorFlow.
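To make the dataflow idea a little more concrete, here is a toy sketch in base R. It is not TensorFlow code and uses no TensorFlow API: the graph is just an R list in which each node names an operation and the nodes whose outputs it consumes, the “tensors” are ordinary R matrices, and a hypothetical `run_graph()` helper executes each node once all of its inputs are available. Describing the graph and executing it are deliberately separate steps, loosely mirroring how a front-end language assembles a graph that a lower-level runtime then executes.

```r
# A toy dataflow graph, for illustration only (not the TensorFlow API).
# Each node names an operation and the nodes whose outputs it consumes.
# Nodes are listed out of order on purpose: the evaluator must work out
# a valid execution order from the edges.
graph <- list(
  out = list(op = "add",    inputs = c("xw", "b")),
  xw  = list(op = "matmul", inputs = c("x", "w")),
  x   = list(op = "const",  value = matrix(c(1, 2, 3, 4), nrow = 2)),
  w   = list(op = "const",  value = matrix(c(0.5, -1, 1, 0.5), nrow = 2)),
  b   = list(op = "const",  value = c(1, 1))
)

# "Kernels": the low-level implementation behind each operation type.
kernels <- list(
  matmul = function(a, b) a %*% b,
  add    = function(a, b) a + b
)

# Hypothetical evaluator: repeatedly runs any node whose inputs are ready,
# i.e. executes the graph in a dependency (topological) order.
run_graph <- function(graph, kernels) {
  results <- list()
  pending <- names(graph)
  while (length(pending) > 0) {
    progressed <- FALSE
    for (name in pending) {
      node <- graph[[name]]
      if (node$op == "const") {
        results[[name]] <- node$value
      } else if (all(node$inputs %in% names(results))) {
        args <- unname(results[node$inputs])
        results[[name]] <- do.call(kernels[[node$op]], args)
      } else {
        next  # inputs not available yet; retry on a later pass
      }
      pending <- setdiff(pending, name)
      progressed <- TRUE
    }
    if (!progressed) stop("graph has a cycle or a missing input")
  }
  results
}

results <- run_graph(graph, kernels)
print(results$out)  # the value of x %*% w + b
```

Real TensorFlow adds a great deal more (device placement, parallel execution of independent nodes, automatic differentiation), so treat this only as a mental model of “operations as nodes, tensors along edges”.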

This kind of programming will probably strike most R users as exotic and obscure, but my guess is that, given the long history of dataflow programming and parallel computing, it was an obvious choice for the Google computer scientists tasked with developing a platform flexible enough to implement arbitrary algorithms, work with extremely large data sets, and be easily deployed on any kind of distributed hardware, including GPUs, CPUs, and mobile devices.

The TensorFlow operations are written in low-level code (C++, CUDA, and libraries such as Eigen) optimized for different operations. Users don’t directly program TensorFlow at this level. Instead, they assemble flow graphs or algorithms using a higher-level language, most commonly Python, that accesses the elementary building blocks through an API.

The keras R package wraps the Keras Python library, which was expressly built for developing Deep Learning models. It supports convolutional networks (for computer vision), recurrent networks (for sequence processing), and any combination of the two, as well as arbitrary network architectures: multi-input or multi-output models, layer sharing, model sharing, etc. (It should be pretty clear that the Python code that makes all this happen counts as good stuff too.)

Getting Started with Keras and TensorFlow

Setting up the whole shebang on your local machine couldn’t be simpler; it takes just three lines of code:

install.packages("keras")   # install the keras R package from CRAN

library(keras)              # load the package

install_keras()             # install TensorFlow, Keras, and their dependencies

Just install and load the keras R package and then run the keras::install_keras() function, which installs TensorFlow, Python and everything else you need, including a Virtualenv or Conda environment. It just works! For instructions on installing Keras and TensorFlow on GPUs, look here.

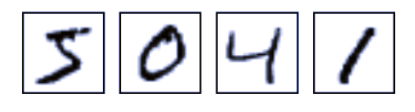

That’s it. In just a few minutes you are ready to start a hands-on exploration of the extensive documentation on RStudio’s TensorFlow website, tensorflow.rstudio.com, or to jump right in and build a Deep Learning model that classifies handwritten digits using the MNIST data set that comes with the keras package, or any one of the twenty-five other pre-built examples.

Beyond Deep Learning

Being able to build production-level Deep Learning applications from R is important, but Deep Learning is not the answer to everything, and TensorFlow is bigger than Deep Learning. The really big ideas around TensorFlow are: (1) TensorFlow is a general-purpose platform for building large, distributed applications on a wide range of cluster architectures, and (2) while data flow programming takes some getting used to, TensorFlow was designed for algorithm development with big data.

Two additional R packages make general modeling and algorithm development in TensorFlow accessible to R users.

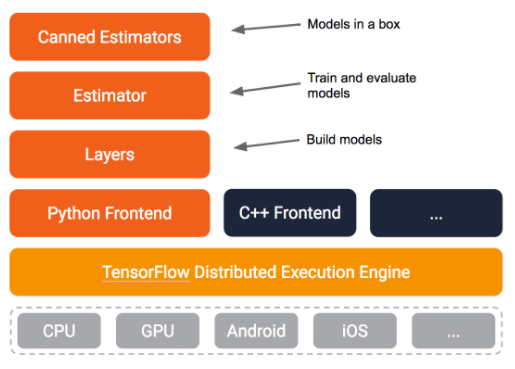

The tfestimators package, currently on GitHub, is an interface to Google’s Estimators API, which gives access to pre-built TensorFlow models including SVMs, Random Forests and k-means clustering. The architecture of the API looks something like this:

There are several layers in the stack, but execution on the small models I am running locally goes quickly. Look here for documentation and sample models that you can run yourself.

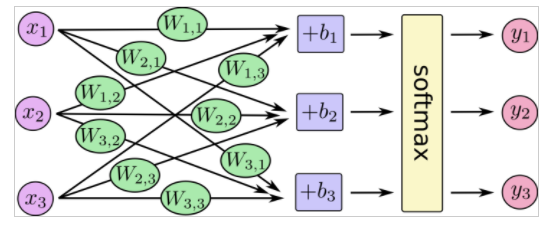

At the deepest level, the tensorflow package provides an interface to the core TensorFlow API, which comprises a set of Python modules for constructing and executing TensorFlow graphs. The documentation on the package’s webpage is impressive, with tutorials for different levels of expertise, several examples, and references for further reading. The MNIST for ML Beginners tutorial revisits the digit-classification problem described above, but instead of using the Keras interface it works at a lower level, stepping through the details of a softmax regression.
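For readers who want a feel for what a softmax regression involves before opening the tutorial, here is a minimal base-R sketch. It is not code from the tutorial or from the tensorflow package: class scores are computed as `X %*% W + b`, a softmax turns each row of scores into class probabilities, and plain batch gradient descent on the mean cross-entropy loss updates `W` and `b`. The small synthetic data set (a stand-in for MNIST), the learning rate, the number of epochs, and the function names are all assumptions chosen for illustration.

```r
# Numerically stable row-wise softmax: each row of scores becomes probabilities.
softmax <- function(scores) {
  scores <- scores - apply(scores, 1, max)  # subtract each row's maximum
  e <- exp(scores)
  e / rowSums(e)
}

# Encode integer labels 1..k as an n x k indicator matrix.
one_hot <- function(y, k) {
  m <- matrix(0, nrow = length(y), ncol = k)
  m[cbind(seq_along(y), y)] <- 1
  m
}

# Fit weights W (features x classes) and biases b by batch gradient descent
# on the mean cross-entropy loss.
fit_softmax <- function(X, y, k, lr = 0.1, epochs = 1000) {
  W <- matrix(0, nrow = ncol(X), ncol = k)
  b <- rep(0, k)
  Y <- one_hot(y, k)
  n <- nrow(X)
  for (i in seq_len(epochs)) {
    P <- softmax(sweep(X %*% W, 2, b, "+"))  # predicted class probabilities
    G <- (P - Y) / n                          # gradient of the loss w.r.t. the scores
    W <- W - lr * crossprod(X, G)             # crossprod(X, G) is t(X) %*% G
    b <- b - lr * colSums(G)
  }
  list(W = W, b = b)
}

# Predict the most probable class for each row of X.
predict_softmax <- function(model, X) {
  P <- softmax(sweep(X %*% model$W, 2, model$b, "+"))
  apply(P, 1, which.max)
}

# Synthetic stand-in for MNIST: three well-separated 2-D clusters.
set.seed(42)
centers <- matrix(c(0, 0, 4, 0, 0, 4), ncol = 2, byrow = TRUE)
y <- rep(1:3, each = 50)
X <- centers[y, ] + matrix(rnorm(length(y) * 2, sd = 0.5), ncol = 2)

model <- fit_softmax(X, y, k = 3)
accuracy <- mean(predict_softmax(model, X) == y)
print(accuracy)
```

The MNIST version has the same shape, just with 784 pixel features and 10 classes instead of 2 features and 3 classes; TensorFlow’s contribution is computing the gradients and running the updates efficiently at that scale.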

While Deep Learning is sure to capture most of the R-to-TensorFlow attention in the near term, I think easy access to a big-league computational platform will turn out to be the most important benefit to R users in the long run.

As a final thought, I am very much enjoying reading the MEAP (Manning Early Access Program edition) of the forthcoming Manning book, Deep Learning with R, by François Chollet, the creator of Keras, and J.J. Allaire. It is a really good read that masterfully balances theory and hands-on practice, and it ought to be helpful to anyone interested in Deep Learning and TensorFlow.
