A few years ago, when I first became aware of Topological Data Analysis (TDA), I was really excited by the possibility that the elegant theorems of Algebraic Topology could provide some new insights into the practical problems of data analysis. But time has passed, and the sober assessment of Larry Wasserman seems to describe where things stand:

> TDA is an exciting area and is full of interesting ideas. But so far, it has had little impact on data analysis.

Nevertheless, TDA researchers have been quietly working on the problem, and at least some of them are using R (see below). Since I first read Professor Wasserman’s paper, I have been keen to get the perspective of a TDA researcher. So I am delighted to present the following interview with Noah Giansiracusa, algebraic geometer, TDA researcher, and co-author of a recent JCGS paper on a new visualization tool for persistent homology.

Q: Hello Dr. Giansiracusa. Thank you for making time for us at R Views. How did you get interested in TDA?

While doing a postdoc in pure mathematics (algebraic geometry, specifically), I, probably like many people, could not escape a feeling that crept up from time to time, particularly during the more frustrating moments of research, that the effort I was putting into proving abstract theorems might have been better spent in a more practical, applied realm of mathematics. However, pragmatic considerations made me reluctant to make a sudden departure at that point in my career. I finally felt like I was gaining some momentum in algebraic geometry and developing a nice network of supportive colleagues, and I would very soon be on the tenure-track job market. I knew that if I was hired (a big “if”!), it would be for a subject I had actually published in, not one I was merely curious about or had recently moved into. But around this same time I kept hearing about an exciting but possibly over-hyped topic called topological data analysis: TDA. It really seemed to be in the air at the time (this was about five years ago).

Q: Why do you think TDA took off?

I can only speak for myself, but I think there were two big reasons that TDA generated so much buzz among mathematicians at the early stages.

First, it was then, and still is, impossible to escape the media blitz on “big data,” the “data revolution,” and related sentiments. This is felt strongly within academic circles (our deans would love us all to be working in data, it seems!) but also in the mainstream press. Yet I think pure mathematicians often felt somewhat on the periphery of this revolution: we knew that the modern developments in data, deep learning, and artificial intelligence would not be possible without the rigorous foundations our mathematical ancestors had laid, but we also knew that most of the theorems we are currently proving would in all likelihood play absolutely no role in any of the contemporary story. TDA offered a hope of relevance: that in the end the pure mathematician would come out of the shadows of obscurity and score a data science victory proving our ineluctable relevance and superiority in all things technical. And this hope quickly turned to hype.

I think I and many other pure mathematicians were rooting for TDA, to show the world that our work has value. We had grown tired of telling stories of how mathematicians invented differential geometry before Einstein used it in relativity, and how your GPS would not be possible without it. We needed a fresher, more decisive victory in the intellectual landscape. Number theory used in cryptography is great, but still too specialized; TDA had the promise of bringing us into the (big) data revolution. And so we hoped, and we hyped.

And second, from a very practical perspective, I simply did not have time to retrain myself in applied math, at least the usual form of applied math based heavily on differential equations, modeling, numerical analysis, etc. But TDA seemed to offer a chance to transition gently into mathematics relevant to data science: instead of starting from scratch, pure mathematicians such as myself would simply need to add one more chapter to our background in topics like algebraic topology, and then we’d be ready to go and could brand ourselves as useful! And if academia didn’t work out, Google would surely rather open its doors to a TDA expert than to a traditional algebraic topologist (or algebraic geometer, in my case).

I think these are two of the main things that brought TDA so much early attention before it really had many real-world successes under its belt, and they are certainly what brought me to it; well, that and also an innocent curiosity to understand what TDA really is, how it works, and whether or not it does what it claims.

Q: So how did you get started?

I first dipped my toes in the TDA waters by agreeing to do a reading course with a couple of undergraduates interested in the topic; then I led an undergraduate/master’s level course where we studied the basics of persistent homology, downloaded some data sets, and played around. We chose R for that because many data sets are readily available in it, and because we wanted to do simple experiments like sampling points from nice objects such as a sphere and then adding noise. We knew we wanted a lot of statistical functions at hand, and R had those, plus TDA packages already. While doing this I grew to quite like R and have stuck with it ever since. In fact, I’m now also using it on a (non-TDA) project to analyze Supreme Court voting patterns from a computational geometry perspective.
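As a rough illustration of the kind of classroom experiment described above, here is a minimal base-R sketch that samples points uniformly from the unit sphere and then adds Gaussian noise. The helper name `sample_noisy_sphere()` and the default noise level are hypothetical choices for this example, not code from the course or from any TDA package.

```r
# Hypothetical helper: n points drawn uniformly from the unit sphere in R^3,
# each then perturbed by independent Gaussian noise with sd = sd_noise.
sample_noisy_sphere <- function(n, sd_noise = 0.05) {
  # Normalizing standard Gaussian vectors gives uniform points on the sphere
  z <- matrix(rnorm(3 * n), ncol = 3)
  on_sphere <- z / sqrt(rowSums(z^2))
  # Add isotropic noise so the sample only approximately lies on the sphere
  on_sphere + matrix(rnorm(3 * n, sd = sd_noise), ncol = 3)
}

set.seed(1)
X <- sample_noisy_sphere(500, sd_noise = 0.05)
summary(sqrt(rowSums(X^2)))  # distances from the origin cluster around 1
```

A point cloud like `X` is the sort of input one would then hand to a persistent homology routine, for example from one of the packages listed in the editor's note at the end of this post.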

Q: Do you think TDA might become a practical tool for statisticians?

First of all, I think this is absolutely the correct way to phrase the question! A few years ago TDA seemed almost adversarial in nature: the idea was that topologists were going to do what data scientists were doing, but better, because fancy math and smart people were involved. So the question at the time seemed to be whether TDA would supplant other forms of data science, and this was a very unfortunate way to view things.

But framed that way, it was easy to discredit TDA entirely by saying that it makes no practical difference whether your data has the homology of a Klein bottle, or that there were no real-world examples where TDA had outperformed machine learning. This type of dialogue was missing the point. As your question suggests, TDA should be viewed as one more arrow in the data science quiver, rather than an entirely new weapon.

In fact, while this clarification moves the dialogue in a healthy direction (TDA and machine learning should work together, rather than compete with each other!), I think there’s still one further step we should take here: TDA is not really a well-defined entity. For instance, when I look at topics like random decision forests, they look very much like topology to me! (Any graph, of which a tree is an example, is a 1-dimensional simplicial complex. And if you look under the hood, the standard Rips complex approach in TDA builds its higher-dimensional simplicial complexes out of a 1-dimensional simplicial complex, so random forests, TDA, and most of network theory are all rooted in the common world of graph theory.)
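To make the graph-theoretic point concrete: the Vietoris-Rips complex at scale eps is determined entirely by its 1-skeleton, the graph joining points at distance at most eps, because a simplex is included exactly when all of its edges are. The base-R sketch below builds that graph and then reads the triangles off it as 3-cliques. The helper `rips_2skeleton()` is hypothetical and deliberately brute-force; for real data one would use a package such as TDA or TDAstats.

```r
# Hypothetical helper: the Vietoris-Rips complex at scale eps, up to dimension 2.
# X holds one point per row (at least 3 points).
rips_2skeleton <- function(X, eps) {
  stopifnot(nrow(X) >= 3)
  D <- as.matrix(dist(X))
  n <- nrow(D)
  # The 1-skeleton is an ordinary graph: an edge wherever two points are within eps
  A <- D <= eps
  pairs <- t(combn(n, 2))
  edges <- pairs[A[pairs], , drop = FALSE]
  # Higher simplices come from the graph alone: a triple is a triangle
  # exactly when all three of its edges are present (a 3-clique)
  triples <- t(combn(n, 3))
  has_edge <- function(i, j) A[triples[, c(i, j), drop = FALSE]]
  is_triangle <- has_edge(1, 2) & has_edge(1, 3) & has_edge(2, 3)
  list(vertices  = seq_len(n),
       edges     = edges,
       triangles = triples[is_triangle, , drop = FALSE])
}

X <- rbind(c(0, 0), c(1, 0), c(0.5, 0.8), c(5, 5))
K <- rips_2skeleton(X, eps = 1.1)
K$triangles  # the single triangle (1, 2, 3); point 4 stays isolated
```

Lowering `eps` to 0.95 drops the edge between points 1 and 2 (length 1), and the triangle disappears with it even though the other two edges remain.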

Another example: the 0-dimensional barcode for the Rips flavor of TDA encodes essentially the same information as single-linkage hierarchical clustering. All I’m saying here is that there’s more traditional data science in TDA than one might first imagine, and there’s more topology in traditional data science than one might realize. I think it is healthy to recognize connections like these: they help one see a continuum of intellectual development rather than a discrete jump from ordinary data science to fancy topological data science.
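The connection to hierarchical clustering (specifically single linkage) can be checked numerically, assuming the convention that an edge of length d enters the Rips filtration at scale d; some software uses d/2 instead, which halves every value. Every point is born at 0, and a 0-dimensional bar dies whenever an edge first joins two components, which is exactly Kruskal's minimum-spanning-tree algorithm. The sketch below computes those death times with a small union-find (the helper `h0_death_times()` is hypothetical) and compares them with the merge heights from base R's `hclust(..., method = "single")`.

```r
# Hypothetical helper: death times of the finite bars in the 0-dimensional
# Rips barcode of point cloud X (one point per row). Assumes an edge of
# length d enters the filtration at scale d.
h0_death_times <- function(X) {
  D <- as.matrix(dist(X))
  n <- nrow(D)
  pairs <- t(combn(n, 2))
  len <- D[pairs]                 # length of every candidate edge
  parent <- seq_len(n)            # union-find forest: each point starts alone
  find <- function(i) {
    while (parent[i] != i) i <- parent[i]
    i
  }
  deaths <- numeric(0)
  # Add edges from shortest to longest (Kruskal's algorithm)
  for (k in order(len)) {
    ri <- find(pairs[k, 1])
    rj <- find(pairs[k, 2])
    if (ri != rj) {
      parent[ri] <- rj            # two components merge: one bar dies here
      deaths <- c(deaths, len[k])
    }
  }
  deaths  # n - 1 finite bars; one more bar, [0, Inf), never dies
}

set.seed(2)
X <- matrix(rnorm(40), ncol = 2)  # 20 random points in the plane
hc <- hclust(dist(X), method = "single")
all.equal(sort(h0_death_times(X)), sort(hc$height))  # TRUE
```

Both vectors are the edge lengths of a minimum spanning tree. One difference is that the barcode keeps only these heights, while the dendrogram also records which clusters merge.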

That’s a long-winded way of saying that you phrased the question well. The (less long-winded) answer is: Yes! Once one sees TDA as one more tool for extracting structure and statistics from data, it is much easier to imagine it being absorbed into the mainstream. It need not outperform all previous methods or revolutionize data science; it merely needs to be, exactly as you worded it, a practical tool. Data science is replete with tools that apply in some settings and not others, work better with some data than others, and reveal more relevant information in some cases than in others, and TDA (whatever it is exactly) fits right into this. Some branches of TDA will certainly gain more traction over the years than others, but I am absolutely convinced that at least some of the methods used in TDA will be absorbed into statistical learning, just as random decision trees and SVMs (both of which have very topological/geometric flavors!) have been. This does not mean that every statistician needs to learn TDA, just as not every statistician needs to learn all the latest methods in deep learning.

Q: Where do you think TDA has had the most success?

Over the past few years, I think the biggest strides TDA has made have been in interweaving it with other methods and disciplines. Big topics with lots of progress, but still room for more, include confidence intervals, distributions of barcodes, feature selection, and kernel methods in persistent homology. These are all exciting topics and healthy for the long-term development of TDA.

I think, perhaps controversially, the next step might actually be to rid ourselves of the label TDA. For one thing, TDA is very geometric and not just topological (which is to say: distances matter!). But the bigger issue for me is that we should refer to the actual tools being used (mapper, persistent homology in its various flavors, etc.) rather than lump them arbitrarily together under this common label. It took many years for statisticians to jump on the machine learning bandwagon, and part of what prevented them from doing so sooner was language; the field of statistical learning essentially translates machine learning into more familiar statistical terminology and reveals that it is just another branch of the same discipline. I suspect something similar will take place with TDA… er, I should say, with these recent topological and geometric data analysis tools.

Q: Do you think focusing on the kinds of concrete problems faced when trying to apply topological and algebraic ideas to data analysis will turn out to be a productive means of motivating mathematical research?

Yes, absolutely—and this is also a great question and a healthy way to look at things! Pure mathematicians have no particular agenda or preconceived notion of what they should and should not be studying: pure mathematics, broadly speaking, is logical exploration and development of structure and symmetry. The more intricate a structure appears to be, and the more connected to other structures we have studied, the more interested we tend to be in it. But that really is pretty much all we need to be interested—and to be happy.

So TDA provides a whole range of new questions we can ask and new structures we can uncover, and inevitably many of these will tie back to earlier areas of pure mathematics in fascinating ways. All the while, through these explorations, pure mathematicians will likely end up laying foundations that provide a stable scaffolding for the adventurous data practitioners who jump into methodology before the full mathematical landscape has been revealed. So TDA absolutely will lead to new, important mathematical research: important both because we’ll uncover beautiful structures and connections, and also because it will provide some certainty for the applied methods built on top of it.

Q: More specifically, what role might the R language play in facilitating the practice or teaching of mathematics?

Broadly speaking, I think many teachers—especially in pure mathematics—undervalue the importance of computer programming skills, though this is starting to change as pure mathematicians increasingly enjoy experimentation as a way of exploring small examples, honing intuition, and finding evidence for conjectures. While the idea of theorem-proof mathematics is certainly the staple of our discipline, it’s not the only way to understand mathematical structure. In fact, students often find mathematical material resonates with them much more strongly if they uncover a pattern by experimenting on a computer rather than just being fed it through lecture or textbooks. Concretely, if students play with something like the distribution of prime numbers, they might get excited to see the fascinating interplay between randomness and structure that emerges, and that can better prepare them to appreciate formally learning the prime number theorem in a classroom. So as things like TDA emerge, the number of pure mathematics topics that can be explored on a computer increases, and I think that’s a great thing.
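The prime-number experiment mentioned above could start as small as this base-R sketch, which lists primes with a sieve of Eratosthenes and compares the prime-counting function pi(x) with the prime number theorem's approximation x / log(x). The helper `primes_up_to()` is a hypothetical name used only for this example.

```r
# Hypothetical helper: sieve of Eratosthenes, returning all primes <= n (n >= 2)
primes_up_to <- function(n) {
  is_prime <- rep(TRUE, n)
  is_prime[1] <- FALSE
  # Candidates 2, 3, ..., floor(sqrt(n)); the sequence is empty when n < 4
  for (p in seq_len(floor(sqrt(n)))[-1]) {
    # Cross out multiples of each prime, starting at p^2
    if (is_prime[p]) is_prime[seq(p * p, n, by = p)] <- FALSE
  }
  which(is_prime)
}

x <- 10^(2:6)
p <- primes_up_to(max(x))
data.frame(
  x      = x,
  pi_x   = sapply(x, function(v) sum(p <= v)),  # number of primes <= x
  approx = round(x / log(x))                     # prime number theorem estimate
)
```

The ratio of the two columns does tend to 1, but slowly, which is itself the kind of pattern a student might enjoy discovering before meeting the theorem formally.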

Q: Where does R fit in?

Well, much of the mathematical exploration I’m referring to here is symbolic, so very precise and algebraic in flavor. R can certainly work precisely, but that is not the main goal of the language, so one would likely use a computer algebra system instead. But one exciting thing TDA does help us see is that there’s a marvelous interface between the symbolic and numerical worlds (here represented by topology and statistics, respectively), and I think this is great for both teaching and research. The more common ground we find between topics that previously seemed quite disparate, the more chance we have of building meaningful interdisciplinary collaborations, the more perspectives we can offer our students to motivate and study something, and the more we see unity within mathematics. My favorite manifestation of this is that TDA is the study of the topology of discrete spaces, but discrete spaces have no non-trivial topology! What’s really going on, then, is that data gives us a discrete glimpse into a continuous, highly structured world, and TDA aims to restore the geometric structure lost in sampling. In doing so one cannot, and should not, avoid statistics, so pure mathematics is brought meaningfully into contact with statistics, and I absolutely love that. This means the R language finds a role in pure math where it may not have had one before: topology with noise, algebra with uncertainty.

Thank you again! I think your ideas are going to inspire some R Views readers.

Editor’s note: here are some R packages for doing TDA:

* TDA contains tools for the statistical analysis of persistent homology and for density clustering.

* TDAmapper implements the Mapper algorithm, which generates a 1-dimensional simplicial complex (a graph) from a filter function defined on the data.

* TDAstats offers a toolset for TDA, including tools for calculating persistent homology of a Vietoris-Rips complex.

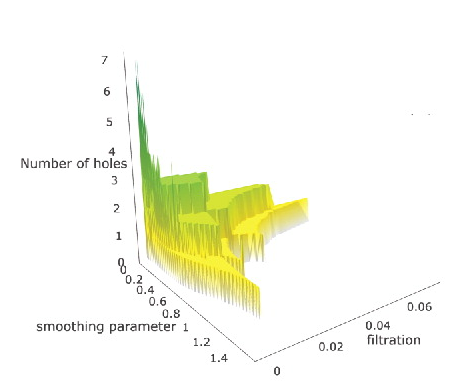

* pterrace builds on TDA and offers a new multi-scale, parameter-free summary plot for studying topological signals.
