Any programming environment should be optimized for its task, and not all tasks are alike. For example, if you are exploring uncharted mountain ranges, the portability of a tent is essential. However, when building a house to weather hurricanes, investing in a strong foundation is important. Similarly, when beginning a new data science programming project, it is prudent to assess how much effort should be put into ensuring the code is reproducible.

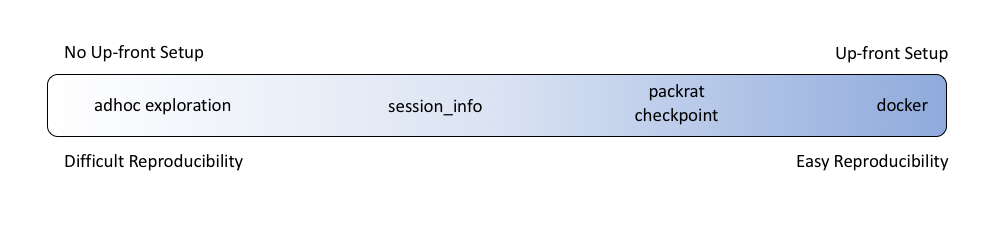

Note that it is certainly possible to go back later and “shore up” the reproducibility of a project where it is weak. This is often the case when an “ad-hoc” project becomes an important production analysis. However, the first step in starting a project is to make a decision regarding the trade-off between the amount of time to set up the project and the probability that the project will need to be reproducible in arbitrary environments.

Challenges

It is important to understand the reasons that reproducible programming is challenging. Once programming practices and external data are taken into account, the primary difficulty is dependency management over time. Dependency management is important because dependencies are so essential to R development. R has a fast-moving community and many extremely valuable packages to make your work more effective and efficient.

You will typically want to ensure that you are using recent versions of packages for a new project. By extension, this will require a recent operating system and a recent version of R.

The best place to start is with a recent operating system and a recent version of R.

Typically, this equates to upgrading R to the latest version once or twice per year, and upgrading your operating system to a new major version every two to three years.

Despite the upsides of a vibrant package ecosystem, R programmers are familiar with the pain that can come with the many (very useful) packages that change, break, and are deprecated over time. Good dependency management ensures your project can be recomputed again in another time or another place.

Solutions

R package management is where most reproducibility decision-making needs to happen, although we will mention system dependencies shortly. CRAN archives source code for all versions of R packages, past and present. As a result, it is always possible to rebuild from source for package versions that you used to build an analysis (even on different operating systems). How you keep track of the dependencies that you used will establish how reproducible your analysis is. As we indicated before, there is a spectrum along which you might fall.
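As a rough illustration of rebuilding from CRAN's archived sources, the sketch below builds the URL of an archived source tarball. The helper name archive_url and the package and version shown are hypothetical choices for illustration. It assumes the standard CRAN layout, in which superseded versions live under src/contrib/Archive (the current release of a package sits in src/contrib instead).

```r
# Build the URL of an archived source tarball on CRAN.
# `archive_url` is a hypothetical helper, not part of any package.
archive_url <- function(package, version, cran = "https://cran.r-project.org") {
  sprintf("%s/src/contrib/Archive/%s/%s_%s.tar.gz", cran, package, package, version)
}

# Example package and version, chosen only for illustration.
url <- archive_url("dplyr", "0.7.4")
print(url)

# Uncomment to build and install that exact version from source
# (needs network access and any compilers the package requires):
# install.packages(url, repos = NULL, type = "source")
```

Installing by hand like this does not resolve the matching versions of a package's own dependencies, which is part of why the tracking tools discussed below are useful.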

Ignoring Reproducibility

There are occasionally times of rapid exploration where the simplest solution is to ignore reproducibility.

Many R developers opt for a single massive system library of R packages and no record of what packages they used for an analysis. It is still recommended to use “RStudio Projects”, if you are using the RStudio IDE, and version control code in git or some other version-control system. This approach is optimal for exploring because it involves almost no setup, and gets the programmer into the problem immediately.

However, even with code version control, it can be very challenging to reproduce a result without documentation of the package versions that were in use when the code was checked in. Further, if one project updates a package that another project was using, it is possible to have the two projects conflict on version dependencies, and one or both can break.

When exploration begins to stabilize, it is best to establish a reproducible environment. You can always capture dependencies at a given time with sessionInfo() or devtools::session_info(), but this only records what was in use; it does not make it easy to rebuild your dependency tree.
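One lightweight way to keep such a record is to write the session information to a file that is checked in with the code. The following is a minimal sketch using only base R; the file name session-info.txt is a hypothetical choice.

```r
# Save the R version, platform, and loaded package versions to a text file.
# "session-info.txt" is a hypothetical file name; commit it with the code.
info_file <- "session-info.txt"
writeLines(capture.output(sessionInfo()), info_file)

# Build a compact table of versions for every namespace currently loaded.
loaded <- loadedNamespaces()
versions <- data.frame(
  package = loaded,
  version = vapply(loaded, function(p) as.character(packageVersion(p)), character(1)),
  row.names = NULL,
  stringsAsFactors = FALSE
)
versions <- versions[order(versions$package), ]
print(head(versions))
```

This documents what was in use, but restoring those versions would still be a manual exercise.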

Tracking Package Dependencies per Project

Tracking dependencies per project isolates package versions at a project level and avoids using the system library. packrat and checkpoint/MRAN both take this approach, so we will discuss each separately.

Programmers in other languages will be familiar with packrat’s approach of storing the exact versions of packages that the project uses in a text file (packrat.lock). It works for CRAN, GitHub, and local packages, and provides a high level of reproducibility. However, a fair amount of time is spent building packages from source, re-installing packages into the local project’s folder, and downloading the source code for packages. Fortunately, packrat has a “global cache” that can speed things up by symlinking package versions that have been installed elsewhere on the system.

MRAN and checkpoint also take the library-per-project approach, but focus on CRAN packages and determine dependencies based on the “snapshot” of CRAN that Microsoft stored on a given day. The programmer need only store the “checkpoint” day they are referencing to keep up with package versions.

Both packages leverage up-front work to make reproducing an analysis quite straightforward later, but it is worth noting the differences between them.

packrat keeps tabs on the packages installed in your project folder and presumes that they form a complete, working, and self-consistent whole. It also downloads package sources to your computer for future re-compiling, if necessary.

checkpoint chooses package versions based on a given day in MRAN history. This presumes that all the package versions you need are available on that day, and that CRAN was self-consistent on that day. If you want to update a package, you will need to choose a later snapshot date and re-install all packages to be sure that none of them break.
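The packrat side of this workflow might look roughly like the sketch below. It assumes packrat is installed; the wrapper function names are hypothetical and exist only to group the steps, while init(), snapshot(), and restore() are packrat's own functions.

```r
# Hypothetical wrappers around packrat's main entry points.
# Nothing runs until one of these functions is called.

# Start tracking dependencies for a project (run once per project).
start_tracking <- function(project_dir = ".") {
  packrat::init(project = project_dir, enter = FALSE)
}

# Record the package versions currently installed in the project library
# into packrat/packrat.lock.
record_versions <- function(project_dir = ".") {
  packrat::snapshot(project = project_dir, prompt = FALSE)
}

# Rebuild the project library from packrat/packrat.lock,
# for example on a new machine after cloning the project.
rebuild_library <- function(project_dir = ".") {
  packrat::restore(project = project_dir, prompt = FALSE)
}
```

A typical sequence would be start_tracking() when the project stabilizes, record_versions() after installing or upgrading a package, and rebuild_library() wherever the analysis needs to be recomputed.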

Tracking All Dependencies per Project

When it comes to other system libraries or dependencies, containers are one of the most popular solutions for reproducibility. Containers behave like lightweight virtual machines, and are often a better fit than full virtual machines for reproducible data science. To give containers a shot, you can install Docker and then take a look at the rocker project (R on Docker).

At a high level, Docker saves a snapshot called an “image” that includes all of the software necessary to complete a task. A running “image” is called a “container.” These images are extensible, so that you can more easily build an image that has the dependencies you need for a given project. For instance, to use the tidyverse, you might execute the following:

docker pull rocker/tidyverse

docker run -d --name=my-r-container -p 8787:8787 rocker/tidyverse

You can then get an interactive terminal with docker exec -it my-r-container bash, or open RStudio in the browser by going to localhost:8787 and authenticating with user:pass rstudio:rstudio.

It is important to consider the difficulty of maintaining package dependencies within the image. If your Dockerfile installs packages from CRAN or GitHub, the regeneration of your image will still be susceptible to changes in the published version of a package. As a result, it is advisable to pair up packrat with Docker for complete dependency management.

A simple Dockerfile like the following will copy the current project folder into the rstudio user’s home (within the container) and install the necessary dependencies using packrat. It requires using packrat for the project.

FROM rocker/rstudio

# install packrat

RUN R -e 'install.packages("packrat", repos="http://cran.rstudio.com", dependencies=TRUE, lib="/usr/local/lib/R/site-library");'

USER rstudio

# copy lock file & install deps

COPY --chown=rstudio:rstudio packrat/packrat.* /home/rstudio/project/packrat/

RUN R -e 'packrat::restore(project="/home/rstudio/project");'

# copy the rest of the directory

# .dockerignore can ignore some files/folders if desirable

COPY --chown=rstudio:rstudio . /home/rstudio/project

USER root

Then the following will get your image started, much like the tidyverse example above.

docker build --tag=my-test-image .

docker run --rm -d --name=my-test-container -p 8787:8787 my-test-image

Note that doing more complex work typically involves a bit of foresight, familiarity with design conventions, and the creation of a custom Dockerfile. However, this up-front work is rewarded by a full operating-system snapshot, including all system and package dependencies. As a result, Docker provides optimal reproducibility for an analysis.

How certain do you need to be that your code is reproducible?

It is necessary and increasingly popular to start thinking about notebooks when discussing reproducibility. However, if the aim is to recompute results in another time or place, we cannot stop there.

When it comes to the management of packages and other system dependencies, you will need to decide whether you want to spend more time setting up a reproducible environment, or if you want to start exploring immediately. Whether you are putting up a tent for the night or building a house that future generations will enjoy, there are plenty of tools to help you on your way and assist you if you ever need to change course.

In future posts, I hope to explore additional aspects of reproducibility.
